AI-Generated Game Assets and Copyright Liability: The Studio Ghibli vs Call of Duty Dispute

Introduction

In November 2025, Activision Blizzard’s Call of Duty: Black Ops 7 became embroiled in controversy following widespread allegations that the game incorporated AI-generated artwork mimicking Studio Ghibli’s distinctive aesthetic in its ‘calling cards’, the collectible backgrounds earned through gameplay or purchases.[1] The franchise, which generates over $1 billion annually,[2] faced immediate backlash from players, artists, and policymakers when social media users identified calling cards bearing unmistakable characteristics of AI-generated Studio Ghibli-style imagery, including the soft watercolour backgrounds and whimsical character designs synonymous with the legendary Japanese animation studio.[3]

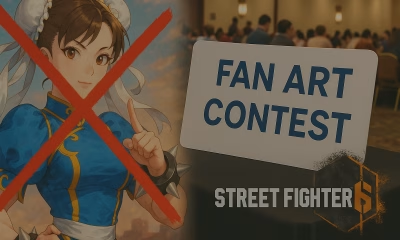

This controversy represents what commentators have termed a ‘watershed moment’ at the intersection of artificial intelligence, intellectual property rights, and consumer protection law.[4] Unlike previous instances where AI use in gaming remained subtle or deniable, the Black Ops 7 calling cards were overtly styled in a manner unmistakably reminiscent of Studio Ghibli’s work, an aesthetic that had proliferated across social media following OpenAI’s March 2025 release of image generation capabilities.[5] This analysis examines the legal implications of Activision’s conduct, focusing on copyright and intellectual property issues, broader industry consequences, and the regulatory landscape governing AI-generated content in commercial products.

Copyrightability of AI-Generated Works

A foundational issue concerns whether AI-generated calling cards receive copyright protection. United States copyright law protects only ‘original works of authorship’,[6] and the US Copyright Office has consistently held that copyright requires human authorship.[7] In the January 2025 Report on Copyrightability of AI Outputs, the Copyright Office reaffirmed that works created autonomously by AI systems without meaningful human creative input cannot be copyrighted.[8] The Copyright Office refused registration for artwork generated by combining a base image with a style image through AI, determining that the AI system was ‘responsible for determining how to interpolate the base and style images’.[9]

Activision’s Steam disclosure states that ‘our team uses generative AI tools to help develop some in-game assets.’[10] This vague phrasing, however, provides insufficient detail to establish the extent of human authorship. If the calling cards were generated predominantly through AI prompting, with no substantial human creative input beyond text prompts and basic selection, Activision likely lacks copyright protection over these assets. This creates a significant vulnerability: the company charged $70 for a premium product containing potentially unprotected content that may sit in the public domain. Consumers purchasing cosmetic items reasonably expect original, legally protected artwork created by human artists, not AI-generated content that any competitor could freely reproduce.

A separate question is whether Activision’s AI-generated imagery infringes Studio Ghibli’s copyright. Copyright law protects specific expressions but not general artistic styles or aesthetics, because allowing monopolies over styles would stifle creativity and would grant the first creator exclusive rights over entire categories of expression rather than just their own work. Here, Studio Ghibli holds copyright over its films, character designs, and specific scenes.

Yet the overall aesthetic, from the watercolour backgrounds to the fantasy elements, is not protected by US copyright law. Infringement may nonetheless occur if AI outputs closely resemble specific copyrighted works. Courts apply the ‘substantial similarity’ test, examining whether an ordinary observer would recognize the allegedly infringing work as appropriated from the copyrighted work.[11]

Multiple ongoing lawsuits against AI companies, including Andersen v Stability AI,[12] allege that training AI models on copyrighted content without permission constitutes infringement. While AI companies argue such training falls under the fair use doctrine, courts have yet to definitively resolve this question.

Gaming community discussions consistently identified the calling cards as ‘AI slop’ and criticized Activision for using them rather than commissioning an authentic Studio Ghibli collaboration.[13] This widespread recognition of the cards as imitations rather than genuine Studio Ghibli creations suggests that their origin was apparent to consumers, potentially precluding the consumer confusion necessary for trademark infringement.

Nevertheless, Activision arguably engaged in unfair competition by exploiting Studio Ghibli’s goodwill and reputation. By capitalizing on the popularity and recognizability of the Ghibli aesthetic without authorization or compensation, Activision trades on Studio Ghibli’s substantial brand value. Even if this conduct does not amount to technical trademark infringement, it raises significant ethical and potentially legal concerns about the commercial exploitation of artistic reputation.

Industry Implications

The Black Ops 7 controversy catalysed urgent discussions about AI’s impact on employment in creative industries. Critics highlighted the profound irony: a franchise generating over $1 billion annually, produced by hundreds of workers across multiple studios, resorting to AI-generated artwork rather than compensating human artists. This raised fundamental concerns about AI deployment not to enhance creativity or support artists, but to reduce labour costs and maximize profits at the expense of human employment.

Activision’s approach sets a concerning precedent for the gaming industry. Black Ops 6, released in 2024, also included AI-generated calling cards featuring telltale signs such as six-fingered hands, the classic marker of AI-generated imagery.[14] However, these were relatively subtle and took months to confirm. The progression from hidden AI use to blatant Studio Ghibli-style imagery suggests a strategy of gradually normalizing AI content, potentially desensitizing consumers to its presence through incremental acceptance.

If this approach succeeds without significant legal or market consequences, other publishers will almost certainly adopt similar practices, accelerating AI adoption throughout the industry. The economic incentive is overwhelming: why pay human artists when AI can generate adequate content at negligible cost? This race to the bottom threatens to fundamentally transform game development from a human-centered creative endeavour into an AI-driven content production pipeline.

Conversely, the significant backlash and media attention could deter similar conduct. Players successfully obtained refunds from digital storefronts specifically citing AI-generated content as grounds, demonstrating that consumer protection mechanisms can provide meaningful recourse. This market response, combined with reputational damage, may incentivize greater transparency and restraint in AI deployment.

Activision’s Steam disclosure, which states that generative AI tools ‘help develop some in-game assets’, employs language minimizing AI’s role, suggesting AI merely assists human creativity rather than substantially or entirely generating content.[15] This vague disclosure, buried in store pages that consumers may not review before purchase, fails to provide meaningful notice about the extent of AI use in the product.

The Federal Trade Commission has confirmed that AI-generated content falls within existing consumer protection statutes and that nondisclosure of material AI use may constitute deceptive practices. The FTC’s August 2024 rule prohibiting fake and AI-generated reviews explicitly addresses AI-generated content, emphasizing that ‘AI tools make it easier for bad actors to pollute’ content ecosystems. While this rule targets reviews rather than in-game assets, it signals the FTC’s concern with AI-facilitated deception and willingness to extend consumer protection principles to AI contexts.

Emerging state laws reinforce disclosure obligations. New York’s Synthetic Performer Disclosure Bill requires conspicuous disclosure when advertisements include AI-generated performances,[16] and California has enacted legislation requiring platforms to label deceptive AI content.[17] Although these laws primarily target advertising, they reflect a broader regulatory trend toward transparency regarding AI use, a trend likely to expand into consumer products, including video games.

Best practices require clear, conspicuous disclosure at the point where consumers encounter AI-generated content, not generic statements buried in store terms. Companies should specifically identify which assets were AI-generated, the extent of human involvement, and any implications for quality or authenticity. Activision’s failure to meet these standards exemplifies the inadequacy of current voluntary disclosure practices and strengthens arguments for mandatory regulatory requirements.

Recommendations

The gaming industry urgently requires mandatory disclosure standards for AI-generated content. Activision’s vague Steam statement that generative AI tools ‘help develop some in-game assets’ exemplifies the inadequacy of current voluntary practices.[18] Companies should be required to provide clear, conspicuous disclosure at the point of purchase, specifically identifying which assets were AI-generated and the extent of human involvement. This disclosure should appear on store pages, in-game menus, and marketing materials where consumers make purchasing decisions, not buried in lengthy terms of service.

The Federal Trade Commission has confirmed that nondisclosure of material AI use may constitute deceptive practices under existing consumer protection statutes.[19] Emerging state laws in New York and California requiring disclosure of AI-generated content in advertising signal a broader regulatory trend.[20] Federal legislation should extend these principles to consumer products, establishing baseline transparency requirements across all digital marketplaces. Such regulation would empower consumers to make informed decisions while creating accountability for companies deploying AI to replace human creativity.

The fundamental copyright question surrounding AI-generated content demands immediate legal clarity. Courts must definitively resolve whether training AI models on copyrighted content without authorization constitutes infringement. Multiple ongoing lawsuits, including Andersen v Stability AI, challenge this practice, but the lack of settled precedent creates legal uncertainty that companies exploit.[21]

Studios like Activision should bear the burden of proving their AI systems were trained on licensed or public domain content, not copyrighted works scraped without permission. Legislative intervention may be necessary to establish that unauthorized use of copyrighted materials for commercial AI training constitutes infringement unless it clearly satisfies fair use criteria.

Additionally, AI-generated outputs that lack meaningful human creative input should be explicitly excluded from copyright protection, preventing companies from claiming ownership over content the Copyright Office has already determined falls into the public domain.[22] This would eliminate the perverse incentive to replace human artists with AI systems while charging premium prices for potentially unprotected content. Clear legal frameworks would protect both original creators whose work trains AI systems and consumers who deserve transparency about what they are purchasing.

The gaming industry must establish ethical guidelines prioritizing human creativity over cost-cutting automation. Industry associations and major publishers should commit to standards ensuring AI serves as a tool augmenting human artists rather than replacing them. This includes minimum requirements for human creative input in AI-assisted workflows and prohibitions on using AI to replicate distinctive artistic styles without authorization or collaboration. The progression from subtle AI use in Black Ops 6 to blatant Studio Ghibli imitation in Black Ops 7 demonstrates the risk of gradual normalization absent clear boundaries. The industry should implement certification programs identifying games developed with meaningful human creative involvement, allowing consumers to support human-centered development.

Publishers should be encouraged to partner with studios like Studio Ghibli for authentic collaborations rather than exploiting their aesthetic through AI imitation. Market mechanisms already show promise, as players successfully obtained refunds citing AI-generated content, demonstrating that consumer protection frameworks can provide recourse. Combined with reputational consequences and potential regulatory action, these pressures can incentivize responsible AI deployment that enhances rather than replaces human creativity.

Conclusion

The Call of Duty: Black Ops 7 controversy represents a turning point for the gaming industry. Activision’s deployment of AI-generated calling cards mimicking Studio Ghibli’s aesthetic raises fundamental questions about copyright protection, consumer transparency, and the future of creative labour. The legal position remains uncertain, with courts yet to definitively resolve whether training AI on copyrighted content constitutes infringement or whether AI outputs lacking human authorship deserve copyright protection. This uncertainty creates vulnerabilities for companies and consumers alike: Activision potentially charged premium prices for content that may sit in the public domain while exploiting Studio Ghibli’s reputation without authorization or compensation.

The controversy extends beyond legal technicalities to fundamental questions about the gaming industry’s values. A billion-dollar franchise resorting to AI-generated artwork rather than compensating human artists reflects a troubling prioritization of profit maximization over creative integrity. The progression from subtle AI use to blatant aesthetic imitation suggests a deliberate strategy of normalizing AI content through incremental acceptance. Without meaningful legal or market consequences, this approach will proliferate throughout the industry, accelerating the replacement of human creativity with automated content generation.

However, the significant backlash, successful consumer refunds, and regulatory attention demonstrate that resistance mechanisms exist. The Federal Trade Commission’s focus on AI-facilitated deception, emerging state disclosure laws, and ongoing copyright litigation signal that legal frameworks are beginning to address AI’s challenges. The path forward requires comprehensive reform: mandatory disclosure requirements, clear copyright standards for training data and AI outputs, and industry commitments to human-centered development. Only through this multifaceted approach can the law protect original creators, empower informed consumers, and ensure that artificial intelligence enhances rather than replaces human creativity in gaming and beyond.

[1] PC Gamer, ‘Call of Duty: Black Ops 7 under fire for using what sure looks like AI-generated Studio Ghibli-style calling card art’ (14 November 2025) https://www.pcgamer.com/games/call-of-duty/call-of-duty-black-ops-7-under-fire-for-using-what-sure-looks-like-ai-generated-studio-ghibli-style-calling-card-art/

[2] Ibid.

[3] Hitmarker, ‘Call of Duty: Black Ops 7 faces backlash over AI-generated calling card artwork’ (1 January 2025) https://hitmarker.net/news/call-of-duty-black-ops-7-faces-backlash-over-ai-generated-calling-card-artwork-1576825

[4] Paul Tassi, ‘Call of Duty: Black Ops 7’s Awful AI Use Is A Watershed Moment’ Forbes (15 November 2025) https://www.forbes.com/sites/paultassi/2025/11/15/call-of-duty-black-ops-7s-awful-ai-use-is-a-watershed-moment/

[5] TechCrunch, ‘Studio Ghibli and other Japanese publishers want OpenAI to stop training on their work’ (4 November 2025) https://techcrunch.com/2025/11/03/studio-ghibli-and-other-japanese-publishers-want-openai-to-stop-training-on-their-work/ accessed 16 February 2026.

[6] 17 USC § 102(a).

[7] US Copyright Office, Copyright and Artificial Intelligence: Part 2: Copyrightability (2025) 4-11.

[8] Ibid.

[9] Congressional Research Service, ‘Generative Artificial Intelligence and Copyright Law’ (Library of Congress, 2024)

[10] PC Gamer, ‘Call of Duty: Black Ops 7 under fire for using what sure looks like AI-generated Studio Ghibli-style calling card art’ (14 November 2025) https://www.pcgamer.com/games/call-of-duty/call-of-duty-black-ops-7-under-fire-for-using-what-sure-looks-like-ai-generated-studio-ghibli-style-calling-card-art/

[11] Arnstein v Porter 154 F 2d 464 (2d Cir 1946).

[12] Andersen v Stability AI Ltd 23-cv-00201 (ND Cal 2024).

[13] Push Square, ‘Embarrassing AI Art Plagues Call of Duty: Black Ops 7 Launch’ (15 November 2025) https://www.pushsquare.com/news/2025/11/embarrassing-ai-art-plagues-call-of-duty-black-ops-7-launch

[14] Push Square, ‘Embarrassing AI Art Plagues Call of Duty: Black Ops 7 Launch’ (15 November 2025) https://www.pushsquare.com/news/2025/11/embarrassing-ai-art-plagues-call-of-duty-black-ops-7-launch

[15] Beebom, ‘Black Ops 7 Faces Backlash After Player Reports AI Calling Cards and Gets Refunded’ (15 November 2025) https://beebom.com/black-ops-7-faces-backlash-after-player-reports-ai-calling-cards-and-gets-refunded/

[16] NY S 8420-A/A 8887-B (Synthetic Performer Disclosure Bill) (passed June 2025).

[17] Defending Democracy from Deepfake Deception Act of 2024, Cal Code Civ Proc § 35; Cal Elec Code § 20510.

[18] Activision Blizzard, Call of Duty: Black Ops 7 Steam Store Page (2025).

[19] Federal Trade Commission, Consumer Protection Guidance on AI-Generated Content (2024).

[20] New York Synthetic Performer Disclosure Bill; California Deceptive AI Content Labeling Act.

[21] Andersen v Stability AI Ltd 23-cv-00201 (ND Cal 2024).

[22] US Copyright Office, Copyright and Artificial Intelligence: Part 2: Copyrightability (2025).